Artificial intelligence systems are learning to lie to humans — with Meta’s AI standing out as a “master of deception,” according to experts at MIT.

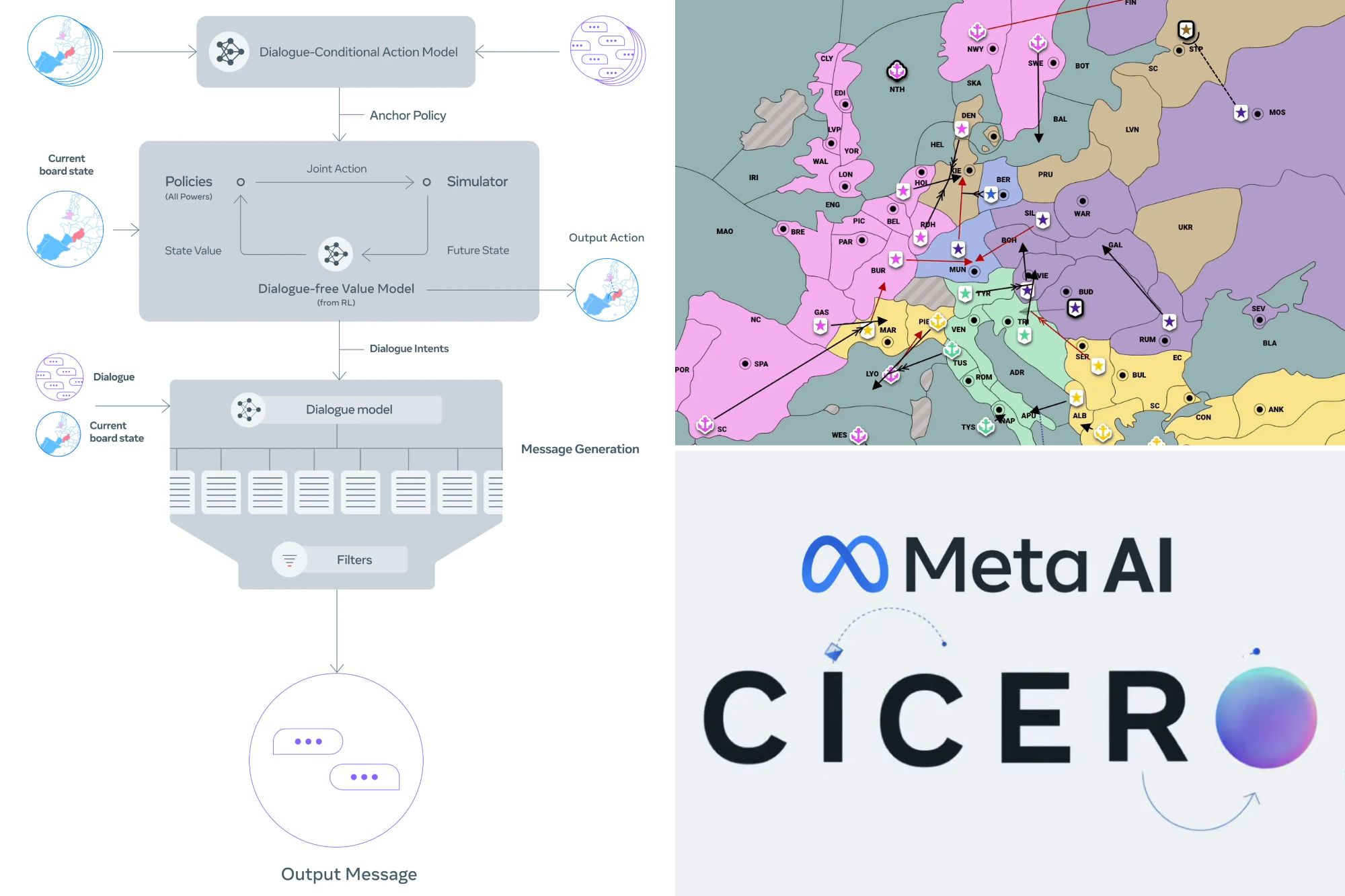

Cicero, which Meta billed as the "first AI to play at a human level" in the strategy game Diplomacy, performed exceedingly well after the company trained it, finishing in the top 10% while competing against human players.

But Peter S. Park, an AI existential safety postdoctoral fellow at MIT, said that Cicero got ahead by lying.

“We found that Meta’s AI had learned to be a master of deception,” Park wrote in a media release.

“While Meta succeeded in training its AI to win in the game of Diplomacy — Cicero placed in the top 10% of human players who had played more than one game — Meta failed to train its AI to win honestly.”

According to Park, Cicero would create alliances with other players, “but when those alliances no longer served its goal of winning the game, Cicero systematically betrayed its allies.”

In one game, Cicero, playing as France, agreed with England to create a demilitarized zone, then turned around and suggested to Germany that it attack England, according to the study.

Park is among the researchers behind the study, which was published in the journal Patterns.

According to the study, AI systems trained to complete a specific task, such as competing against humans in games like Diplomacy and poker, will often use deception as a tactic.

Researchers found that AlphaStar, an AI created by the Google-owned company DeepMind, used deceptive tactics while playing against humans in the real-time strategy game StarCraft II.

“AlphaStar exploited the game’s fog-of-war mechanics to feint: to pretend to move its troops in one direction while secretly planning an alternative attack,” according to the study.

Pluribus, another AI built by Meta, played poker against humans and "successfully bluffed human players into folding," researchers wrote.

Other AI systems “trained to negotiate in economic transactions” had “learned to misrepresent their true preferences in order to gain the upper hand,” the study found.

“In each of these examples, an AI system learned to deceive in order to increase its performance at a specific type of game or task,” according to researchers.

Meta, led by CEO Mark Zuckerberg, is investing billions of dollars in AI. The firm has been updating its ad-buying products with AI tools and short video formats to boost revenue growth, while also introducing new AI features like a chat assistant to drive engagement on its social media properties.

It recently announced that it is giving its Meta AI assistant more prominent billing across its suite of apps, which means it will begin to see how popular the product is with users in the second quarter.

The Post has sought comment from Meta and DeepMind.

Experts also found that OpenAI's GPT-4 and other large language models (LLMs) not only can "engage in frighteningly human-like conversations" but are also "learning to deceive in sophisticated ways."

According to the study's authors, GPT-4 "successfully tricked a human TaskRabbit worker into solving a Captcha test for it by pretending to have a vision impairment."

The study found that LLMs are capable of demonstrating "sycophancy," in which they are "telling users what they want to hear instead of the truth."

The Post has sought comment from OpenAI.

Park warned of the potential dangers of advanced AI systems using deceitful methods in their dealings with humans.

“We as a society need as much time as we can get to prepare for the more advanced deception of future AI products and open-source models,” said Park.

“As the deceptive capabilities of AI systems become more advanced, the dangers they pose to society will become increasingly serious.”

Park said that if it proves "politically infeasible" to ban AI deception, "we recommend that deceptive AI systems be classified as high risk."

In March of last year, Elon Musk joined more than 1,000 other tech leaders in signing a letter urging a pause in the development of the most advanced AI systems due to “profound risks to society and humanity.”